Kubernetes Workload Sizing: What We Have and What We Need

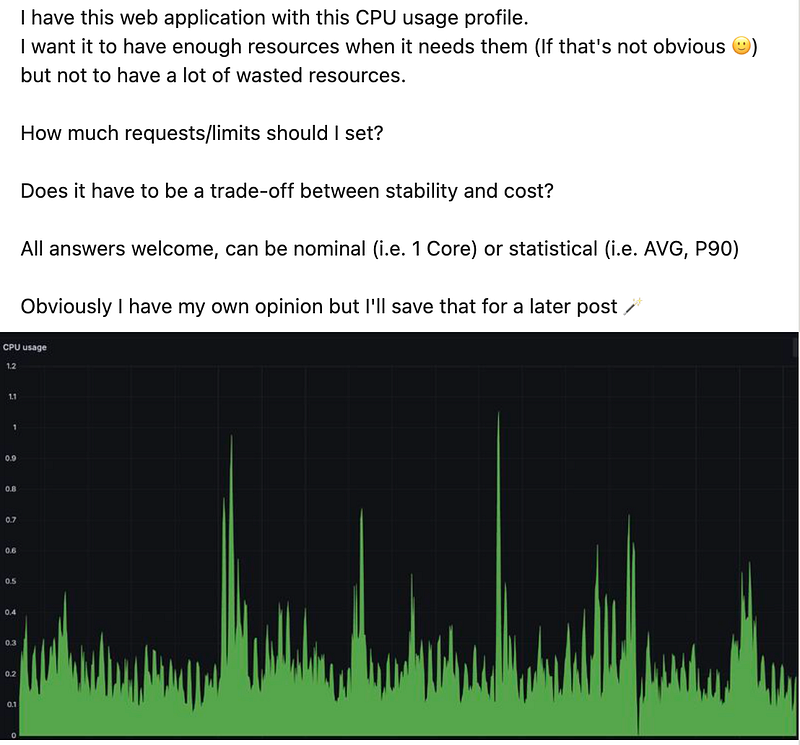

I recently posted a LinkedIn challenge called “Size me this workload.”

The challenge started from a baseline environment that already had Karpenter and KEDA in place, and I got some great answers!

Many suggested “KEDA should do the trick,” “Use VPA,” or “Configure a percentile depending on your SLA.”

But nothing bulletproof.

Even after implementing Karpenter and KEDA, we still need to manage our workload requests to make them as optimal as possible.

In fact, the tighter bin-packing these tools deliver makes accurate requests even more critical: before it, wasted capacity acted as an unintentional safety net.
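
Here is a rough arithmetic sketch of that lost safety net. All numbers are hypothetical and chosen for illustration: the same four Pods, each requesting 1 GiB of memory and occasionally bursting 512 MiB above it, are first spread across two 4 GiB nodes and then bin-packed onto one. The only thing that can absorb a burst above requests is capacity nobody requested.

```python
def node_slack(allocatable_mib: int, requests_mib: list[int]) -> int:
    """Unrequested memory on a node: the implicit safety net for bursts."""
    return allocatable_mib - sum(requests_mib)

# Hypothetical numbers for illustration only.
requests = [1024, 1024, 1024, 1024]  # four Pods, 1 GiB request each
burst_mib = 512                      # how far one Pod may burst above its request

# Loosely packed: two Pods on each of two 4 GiB nodes.
loose = node_slack(4096, requests[:2])
# Tightly packed: all four Pods on a single 4 GiB node.
tight = node_slack(4096, requests)

print(f"loose packing: {loose} MiB spare, burst fits: {burst_mib <= loose}")
print(f"tight packing: {tight} MiB spare, burst fits: {burst_mib <= tight}")
```

The requests are identical in both layouts; only the accidental headroom disappears, which is why request accuracy matters more once packing improves.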

When we don’t have proper workload definitions, Pods from the same logical workload (like Spark jobs or CI/CD runners) are treated as independent entities, so each Pod is sized without any view of how the workload behaves as a whole.

Without runtime awareness, we treat all containers the same, ignoring that different runtimes have fundamentally different resource behaviors:

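Take the JVM as an example: by default, it caps its maximum heap at 25% of the memory available to it (the -XX:MaxRAMPercentage default), and inside a container that means 25% of the memory limit. When we lower the limit, the maximum heap shrinks proportionally, which can trigger Java OutOfMemoryErrors even while the container as a whole is still below its limit.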
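
A back-of-the-envelope sketch of that default, assuming a container-aware JDK with no explicit -Xmx or -XX:MaxRAMPercentage set, and ignoring the JVM's different rule for very small memory sizes. The 700 MiB heap requirement is a hypothetical figure.

```python
# Default share of available memory used as the maximum heap
# (-XX:MaxRAMPercentage defaults to 25.0).
DEFAULT_MAX_RAM_PERCENTAGE = 25.0

def default_max_heap_mib(memory_limit_mib: int,
                         max_ram_percentage: float = DEFAULT_MAX_RAM_PERCENTAGE) -> int:
    """Approximate default max heap for a container memory limit.

    Simplification: ignores explicit -Xmx and the small-memory special case.
    """
    return int(memory_limit_mib * max_ram_percentage / 100)

# Hypothetical service whose live heap needs roughly 700 MiB.
heap_needed_mib = 700
for limit in (4096, 2048):
    heap = default_max_heap_mib(limit)
    status = "fits" if heap >= heap_needed_mib else "risk of java.lang.OutOfMemoryError"
    print(f"limit={limit} MiB -> max heap={heap} MiB: {status}")
```

Halving the limit halves the heap here. A runtime-aware sizer therefore has to consider heap flags together with the limit rather than the limit alone.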

Setting limits without understanding these behaviors can lead to unexpected OOMKills or degraded performance.

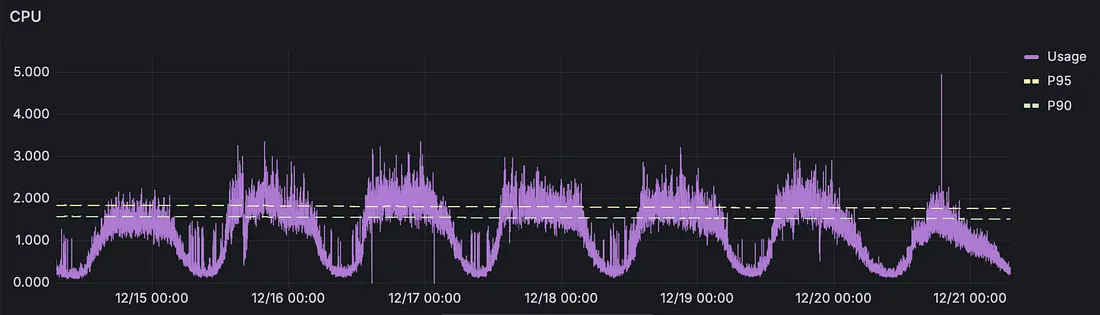

Traditional sizing tools force us to pick a percentile, such as P90, P95, or P99, without understanding what happens during the remaining percentage of time or how severe those peaks actually are (the next evolution of guesswork!).
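
A minimal sketch of what a percentile-based recommendation leaves out. It uses the nearest-rank percentile, which is one of several definitions (many tools interpolate instead). The usage series is hypothetical: a steady baseline with five short bursts.

```python
import math

def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile, p in 0..100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def tail_report(samples: list[float], p: float) -> dict:
    """What a Pp-based request hides: how often and how far usage exceeds it."""
    request = percentile(samples, p)
    above = [s for s in samples if s > request]
    peak = max(samples)
    return {
        "request": request,
        "fraction_above": len(above) / len(samples),
        "peak": peak,
        "peak_to_request": peak / request,
    }

# Hypothetical CPU usage in millicores, one sample per minute:
# a ~200m baseline plus five bursts.
usage = [200.0] * 95 + [900.0, 1200.0, 1500.0, 1800.0, 2000.0]
print(tail_report(usage, 95))
```

The P95 request lands on the baseline, and it says nothing about the 5% of samples above it, whose peak here is ten times the request.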

This creates a double-edged problem:

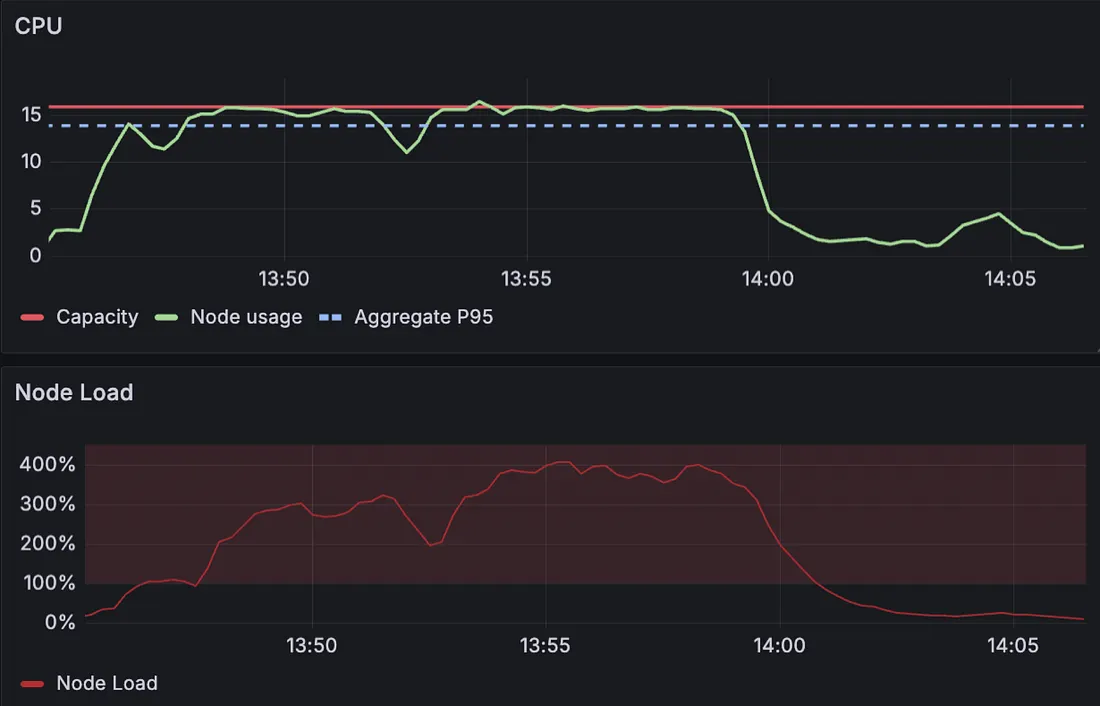

Even worse, if the peak is far higher than the baseline, I’m in serious trouble.

This is especially problematic because peaks often correlate with business-critical moments, exactly when we can least afford instability. We’re making financial and stability decisions based on incomplete information about both typical usage and burst patterns.

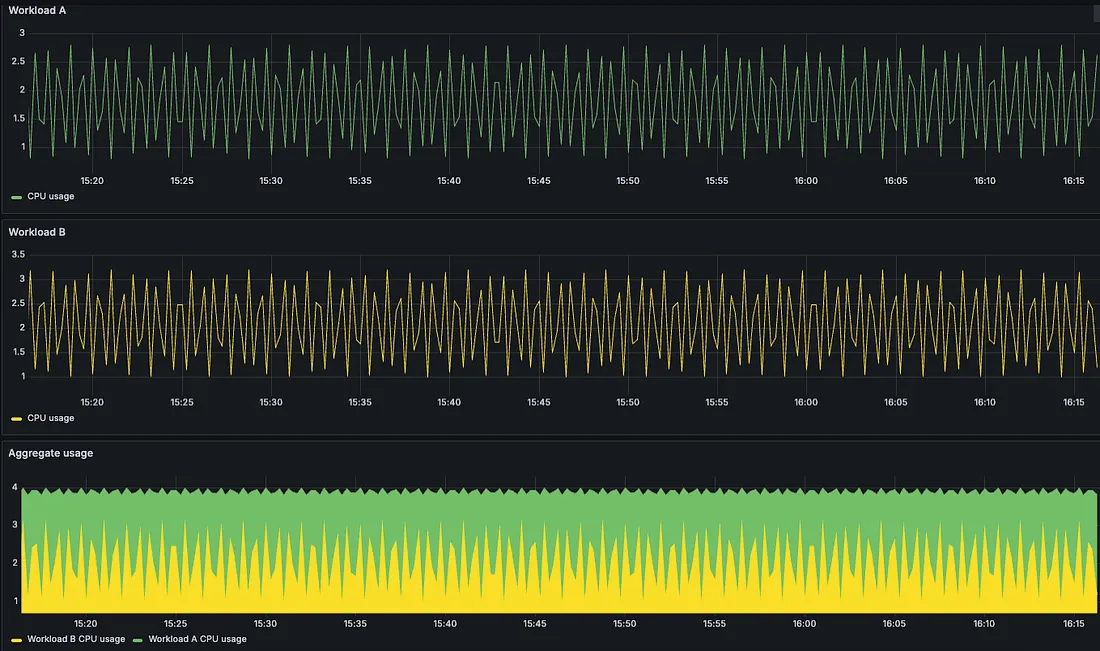

Optimizing each workload in isolation ignores the reality that they share infrastructure. With this in mind:

These problems compound on each other. A lack of context in one area forces conservative decisions in others, leading to a cascading effect of waste and risk throughout the cluster.

We need a system that knows how to group these Pods together to understand and define how the workload behaves.
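
A minimal sketch of that grouping step. The Pod records and the "workload" field are hypothetical; in a real cluster the grouping key would have to be derived from metadata such as owner references or labels. The point is that an ephemeral Pod like a new CI runner has no history of its own, while its workload does.

```python
from collections import defaultdict

# Hypothetical per-Pod memory samples; "workload" is an assumed grouping key.
pod_samples = [
    {"pod": "ci-runner-7f2k", "workload": "ci-runner", "mem_mib": 900},
    {"pod": "ci-runner-9x1p", "workload": "ci-runner", "mem_mib": 2400},
    {"pod": "ci-runner-a3bq", "workload": "ci-runner", "mem_mib": 1100},
    {"pod": "spark-exec-1", "workload": "spark-etl", "mem_mib": 3000},
    {"pod": "spark-exec-2", "workload": "spark-etl", "mem_mib": 3200},
]

def group_by_workload(samples):
    """Collect memory samples per logical workload instead of per Pod."""
    groups = defaultdict(list)
    for s in samples:
        groups[s["workload"]].append(s["mem_mib"])
    return dict(groups)

def workload_profiles(samples):
    """Summarize each workload from the combined history of all its Pods."""
    return {
        name: {
            "pods": len(values),
            "max_mib": max(values),
            "mean_mib": sum(values) / len(values),
        }
        for name, values in group_by_workload(samples).items()
    }

for name, profile in workload_profiles(pod_samples).items():
    print(name, profile)
```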

We need a system that understands different runtimes, how they interact with sizing configurations, and how to size them accordingly.

We need to be node-centric, calculating how workloads perform as a group rather than individually. This lets us identify which workloads peak together and which have oscillating patterns.
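
One simple way to tell those two cases apart is the Pearson correlation of time-aligned usage series for workloads sharing a node. This is a sketch with hypothetical hourly data, and the 0.5 threshold is an arbitrary illustrative cut-off, not a recommended value.

```python
import math

def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two equal-length, time-aligned series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def classify_pair(a, b, threshold=0.5):
    """Label a pair of workloads; threshold is a hypothetical cut-off."""
    r = pearson(a, b)
    if r >= threshold:
        return r, "peak together"
    if r <= -threshold:
        return r, "oscillating (complementary)"
    return r, "weakly related"

# Hypothetical hourly CPU usage (cores) for three workloads on one node.
api = [1, 2, 4, 4, 2, 1]
worker = [1, 2, 4, 4, 2, 1]  # same shape as api
batch = [4, 3, 1, 1, 3, 4]   # busy when api is quiet

print(classify_pair(api, worker))
print(classify_pair(api, batch))
```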

For workloads with oscillating patterns, we may not need to reserve capacity for every workload’s individual peak, since their usage patterns can complement one another, and the effect compounds with economies of scale.
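
The difference can be shown as "sum of peaks" (each workload sized for its own peak) versus "peak of sum" (the node sized for the combined usage). This sketch assumes time-aligned samples, and the two series are hypothetical.

```python
def sum_of_peaks(series: list[list[float]]) -> float:
    """Capacity needed if every workload is sized for its own peak."""
    return sum(max(s) for s in series)

def peak_of_sum(series: list[list[float]]) -> float:
    """Capacity needed for the combined usage; assumes aligned samples."""
    return max(sum(values) for values in zip(*series))

# Hypothetical hourly CPU usage (cores) for two complementary workloads.
api = [1, 2, 4, 4, 2, 1]
batch = [4, 3, 1, 1, 3, 4]

print("sum of peaks:", sum_of_peaks([api, batch]))  # sized individually
print("peak of sum:", peak_of_sum([api, batch]))    # sized as a group
```

For workloads that peak together the two numbers are equal, so the saving only appears when peaks do not coincide. Adding more workloads whose peaks are spread out tends to widen the gap.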

Kubernetes workload sizing remains a challenge even with tools like VPA, and Karpenter and KEDA only amplify it.

Current solutions rely on simple percentile-based approaches that don’t account for critical factors such as workload bursts, runtime behaviors, and node-level resource dynamics.

To truly optimize resource allocation, we need context-aware systems that understand workload definitions across different controllers, recognize runtime-specific requirements, account for burst patterns that occur during peak demand, and take a node-centric approach that considers how workloads interact as a group rather than in isolation.

This shift from guesswork to intelligent, context-driven sizing is essential for balancing stability and cost-efficiency in Kubernetes environments, and it is exactly what we are building at Wand Cloud. If you’d like to see how this works for your environment, schedule a demo to explore optimization without the guesswork.